Researchers Design Novel Reaction Description Language for Encoding Molecular Editing Operations in Chemical Reactions

Artificial intelligence technologies, represented by large language models (LLMs), have achieved unprecedented breakthroughs in natural language processing and are influencing the paradigm of scientific research. In chemistry and pharmaceuticals, the concept of chemical language models (CLMs), which handle chemical molecules and reactions, has emerged. CLMs capitalize on chemist-defined molecular linear notations to learn and generate molecular structures. The most commonly used molecular linear notation is the Simplified Molecular Input Line Entry System (SMILES).
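A linear notation becomes usable by a language model once it is split into tokens. The minimal sketch below shows one common way to tokenize a SMILES string. The regular expression is a widely used pattern from the reaction-prediction literature and is an assumption here; the article does not say which tokenizer the study used, and `tokenize_smiles` is a hypothetical helper name.

```python
import re

# Widely used SMILES tokenization pattern (an assumption, not necessarily the study's):
# bracket atoms, two-letter halogens, aromatic atoms, bonds, branches, ring-closure digits.
SMILES_TOKEN_PATTERN = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|/|:|~|@|\?|>|\*|\$|%[0-9]{2}|[0-9])"
)


def tokenize_smiles(smiles: str) -> list[str]:
    """Split a SMILES string into tokens a chemical language model could consume."""
    tokens = SMILES_TOKEN_PATTERN.findall(smiles)
    # The tokens must reconstruct the input exactly; otherwise some characters
    # were not covered by the pattern and would be silently dropped.
    if "".join(tokens) != smiles:
        raise ValueError(f"Untokenizable characters in SMILES: {smiles!r}")
    return tokens


if __name__ == "__main__":
    # Acetanilide: each atom, bond symbol, branch and ring digit becomes one token
    print(tokenize_smiles("CC(=O)Nc1ccccc1"))
    # ['C', 'C', '(', '=', 'O', ')', 'N', 'c', '1', 'c', 'c', 'c', 'c', 'c', '1']
```

Matching `Br?` and `Cl?` before single letters keeps two-letter halogens such as `Cl` and `Br` as single tokens instead of splitting them.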

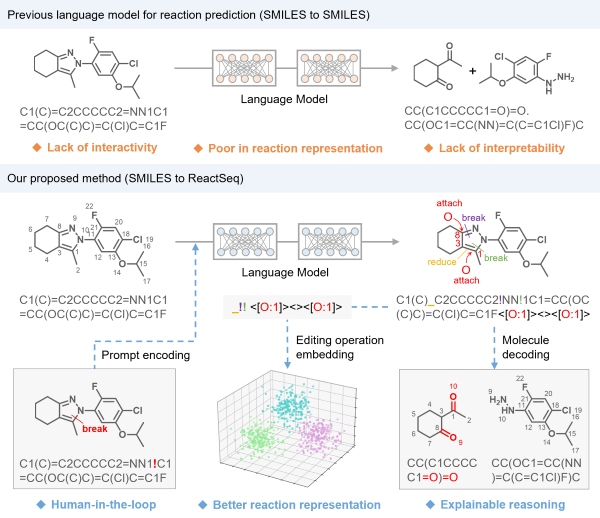

Recently, several new molecular linear notations have been designed to improve the performance of CLMs on specific tasks. However, these notations all describe the static structures of molecules. They cannot explicitly describe a crucial aspect of chemistry, namely the changes in atoms and bonds that occur during a chemical reaction. This significantly limits the application of language models to chemical reaction prediction and representation.

In a study published in Nature Machine Intelligence on May 13, a research team led by ZHENG Mingyue from the Shanghai Institute of Materia Medica of the Chinese Academy of Sciences designed a new reaction description language called ReactSeq.

Inspired by the retrosynthesis process, ReactSeq encodes both the product structure and the molecular editing operations (MEOs) required to transform it back into the reactant molecules. These MEOs include the breaking and changing of chemical bonds, alterations in atomic charges, and the attachment of leaving groups (LGs), among others. In a ReactSeq-based retrosynthesis language model, reactants are not generated token by token from scratch. Instead, they are derived from the product molecule through these MEOs, which ensures precise atom mapping between the predicted reactants and the product and thereby enhances the model's interpretability.
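The toy sketch below illustrates the idea of deriving reactants by editing the product graph instead of writing them from scratch. It is not ReactSeq itself: the class `MolGraph`, the functions `apply_meos` and `split_reactants`, and the operation names (`break`, `change`, `charge`, `attach_lg`) are hypothetical stand-ins, and ReactSeq's real syntax is defined in the paper. Because every product atom keeps its atom-map number through the edits, the mapping between product and reactants holds by construction.

```python
from dataclasses import dataclass, field


@dataclass
class MolGraph:
    """Hypothetical toy molecular graph keyed by atom-map numbers."""
    atoms: dict[int, str]                                   # map number -> element
    bonds: dict[frozenset, int]                             # {a, b} -> bond order
    charges: dict[int, int] = field(default_factory=dict)   # map number -> formal charge


def apply_meos(product: MolGraph, meos: list[tuple]) -> MolGraph:
    """Apply hypothetical molecular editing operations to a copy of the product."""
    mol = MolGraph(dict(product.atoms), dict(product.bonds), dict(product.charges))
    for op, *args in meos:
        if op == "break":            # remove a bond entirely
            a, b = args
            del mol.bonds[frozenset((a, b))]
        elif op == "change":         # change a bond order, e.g. 2 -> 1
            a, b, order = args
            mol.bonds[frozenset((a, b))] = order
        elif op == "charge":         # set the formal charge of one atom
            atom, charge = args
            mol.charges[atom] = charge
        elif op == "attach_lg":      # attach a one-atom leaving group by a single bond
            atom, element = args
            new_id = max(mol.atoms) + 1   # LG atoms are absent from the product, so new ids
            mol.atoms[new_id] = element
            mol.bonds[frozenset((atom, new_id))] = 1
        else:
            raise ValueError(f"Unknown MEO: {op}")
    return mol


def split_reactants(mol: MolGraph) -> list[set[int]]:
    """Return connected components (one per reactant) as sets of atom-map numbers."""
    neighbors = {a: set() for a in mol.atoms}
    for pair in mol.bonds:
        a, b = tuple(pair)
        neighbors[a].add(b)
        neighbors[b].add(a)
    seen: set[int] = set()
    components = []
    for start in sorted(mol.atoms):
        if start in seen:
            continue
        stack, comp = [start], set()
        while stack:                  # depth-first search over bonds
            atom = stack.pop()
            if atom not in comp:
                comp.add(atom)
                stack.extend(neighbors[atom] - comp)
        seen |= comp
        components.append(comp)
    return components


if __name__ == "__main__":
    # N-methylacetamide, CC(=O)NC, with atom-map numbers 1..5
    product = MolGraph(
        atoms={1: "C", 2: "C", 3: "O", 4: "N", 5: "C"},
        bonds={frozenset((1, 2)): 1, frozenset((2, 3)): 2,
               frozenset((2, 4)): 1, frozenset((4, 5)): 1},
    )
    # Disconnect the amide C-N bond and put an OH leaving group on the carbonyl carbon
    reactants = apply_meos(product, [("break", 2, 4), ("attach_lg", 2, "O")])
    print(split_reactants(reactants))  # [{1, 2, 3, 6}, {4, 5}]
```

The two components correspond to acetic acid (atoms 1, 2, 3 plus the new oxygen 6) and methylamine (atoms 4 and 5); product atoms 1 to 5 reappear in the reactants with unchanged numbers.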

Using ReactSeq, a vanilla Transformer can achieve state-of-the-art performance in retrosynthesis prediction. ReactSeq also contains explicit tokens denoting MEOs, which makes it possible to encode human instructions. Moreover, expert prompts can significantly improve the model's performance and even guide it to explore new reactions. These tokens also benefit the extraction of reaction representations: compared with aggregating the embeddings of the entire ReactSeq sequence, focusing on the embeddings of the MEO tokens can yield more faithful and intrinsic reaction representations.
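The article does not say how the MEO-token embeddings are combined. The sketch below assumes simple masked mean pooling over encoder outputs and contrasts the two choices described above: averaging every token versus averaging only the MEO tokens. The angle-bracket token convention in the hypothetical `is_meo_token`, the helper names, and the toy vectors are illustrative assumptions, not ReactSeq's vocabulary or the study's model outputs.

```python
from typing import Callable


def mean_pool(embeddings: list[list[float]], mask: list[bool]) -> list[float]:
    """Average the embedding vectors at positions where mask is True."""
    selected = [vec for vec, keep in zip(embeddings, mask) if keep]
    if not selected:
        raise ValueError("mask selects no tokens")
    dim = len(selected[0])
    return [sum(vec[i] for vec in selected) / len(selected) for i in range(dim)]


def is_meo_token(token: str) -> bool:
    """Hypothetical convention: MEO tokens are written in angle brackets, e.g. '<BREAK>'."""
    return token.startswith("<") and token.endswith(">")


def reaction_vectors(
    tokens: list[str],
    embeddings: list[list[float]],
    is_meo: Callable[[str], bool] = is_meo_token,
) -> tuple[list[float], list[float]]:
    """Return (whole-sequence vector, MEO-only vector) for one encoded reaction."""
    if len(tokens) != len(embeddings):
        raise ValueError("need exactly one embedding per token")
    whole = mean_pool(embeddings, [True] * len(tokens))
    meo_only = mean_pool(embeddings, [is_meo(t) for t in tokens])
    return whole, meo_only


if __name__ == "__main__":
    tokens = ["C", "<BREAK>", "N", "<LG:OH>"]
    # Toy 2-d "encoder outputs", one vector per token
    embeddings = [[1.0, 0.0], [0.0, 2.0], [1.0, 0.0], [0.0, 4.0]]
    print(reaction_vectors(tokens, embeddings))  # ([0.5, 1.5], [0.0, 3.0])
```

In the whole-sequence average, the atom tokens dilute the signal from the edits; the MEO-only average depends solely on the tokens that describe what changed in the reaction, which is the intuition the paragraph points to.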

Utilizing this strategy and self-supervised learning, the research team developed a universal and reliable reaction representation. This representation has proven effective across multiple tasks, including reaction classification, similar reaction retrieval, reaction yield prediction, and experimental procedure recommendation.

This study introduced a novel chemical language that endows domain-specific LLMs with several emergent capabilities. This advancement significantly enhances the ability of natural language processing models to tackle complex chemical problems and provides a new direction for the development of foundational models in chemical artificial intelligence.

DOI: 10.1038/s42256-025-01032-8

Link: https://doi.org/10.1038/s42256-025-01032-8

A comparison between the previous SMILES-based language model for reaction prediction and the ReactSeq-based method (Image by ZHENG’s lab)

Contact:

JIANG Qingling

Shanghai Institute of Materia Medica, Chinese Academy of Sciences

E-mail: qljiang@stimes.cn